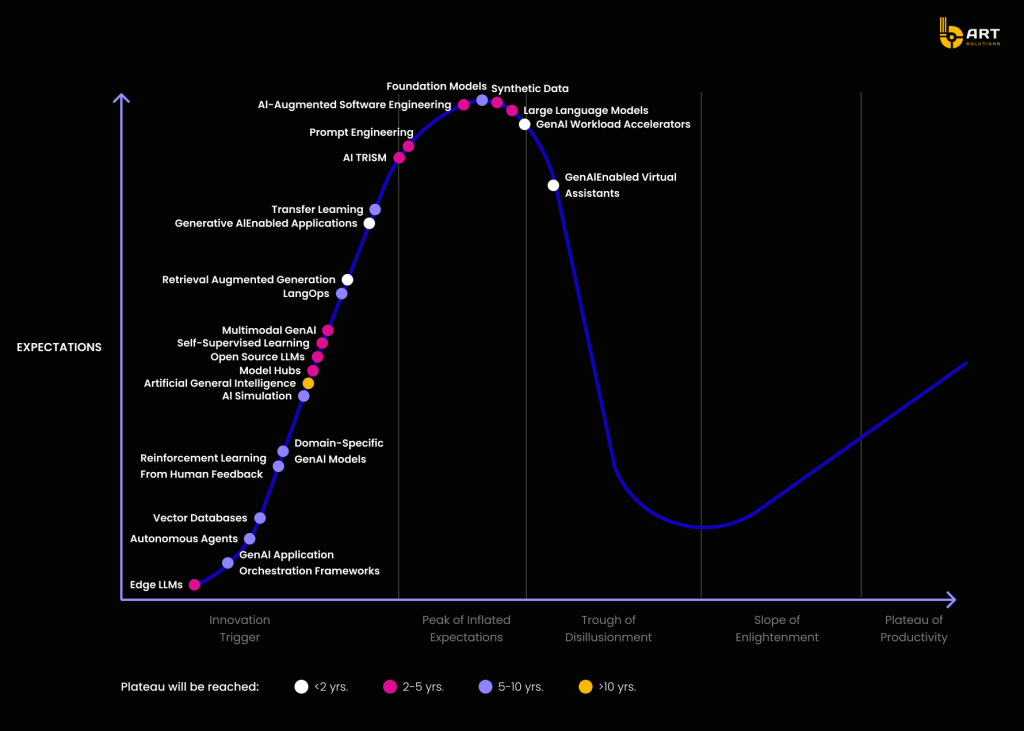

According to Gartner, by 2026 more than 80 % of businesses will adopt some form of generative AI. In response IT companies are shaping their spendings around AI: Gartner also projects a 42.4 % surge in data center investment driven by AI workloads.

In McKinsey’s State of AI survey C‑suite respondents say their companies aren’t yet seeing measurable gains in profit from generative AI. The difference between adoption and real, sustainable outcomes makes most projects stumble.

Python, with its rich ecosystem of machine learning libraries and community-driven tooling, has cemented its role as the core language for AI development. PyTorch for deep learning, TensorFlow for model pipelines, or FastAPI for lightweight inference APIs, the Python stack is deeply embedded in enterprise AI workflows, so it surpassed JavaScript as the most used language on GitHub.

Framework selection affects everything from GPU utilization to deployment speed and long-term team velocity.

Meta’s internal adoption of PyTorch (which now powers the majority of its AI models) is driven not simply by performance, but by clean API design and ease of experimentation at scale.

TensorFlow, backed by Google, offers a mature ecosystem for enterprise-grade training and inference pipelines where production reproducibility and deployment tooling (like TFX or TensorFlow Serving) are priorities.

Python frameworks like FastAPI have gained traction for exposing models as lightweight, async-ready APIs, used widely due to their performance parity with Node.js and Go for many AI inference workloads.

Together, these frameworks form a powerful toolset that unlocks rapid prototyping and flexible design, but also comes with a snowball of potential hurdles.

Navigating these challenges takes experience, get in touch to find help.

To sum it up: companies are leaning hard into AI, expectations are sky‑high, and Python sits in the middle of it. But what looks perfectly well on paper may fail real systems: performance drops, integration breaks, and deployment becomes a challenge.

This article takes a closer look at all the nuances of building enterprise-ready AI applications with Python-based frameworks. We’ll break down the common technical difficulties, integration roadblocks, and deployment pitfalls, and suggest strategies for delivering AI systems that work.

Key challenges in using Python for AI development

Python and its ecosystem weren’t designed for high-performance, scalable, maintainable systems, and that’s exactly what enterprise AI deployment demands.

Let’s break down the most common technical roadblocks and what you can actually do about them.

Performance bottlenecks in Python-based Machine Learning

Python’s dynamic nature and the Global Interpreter Lock (GIL) often become bottlenecks in AI frameworks when building high-concurrency systems for real-time inference.

A benchmark testing PyTorch on large batch image classification found that default DataLoader settings yielded only 34% GPU utilization, while optimized setups (using alternatives like SPDL) pushed that up to 75%, essentially doubling throughput during training.

In enterprise AI solutions, inference latency is closely tied to user experience and revenue. Sluggish APIs can block downstream systems, delay automation, or create compliance risks in regulated environments.

Workaround: Where possible, offload compute-heavy logic to Numba or Cython, and export models to ONNX Runtime or TensorRT for optimized serving. Adopting FastAPI allows async, low-latency endpoints suitable for high-volume AI performance optimization.

Scalability issues across multi-GPU and cloud environments

Prototyping a model is one thing; scaling it is another. As teams move toward multi-GPU AI deployment or hybrid cloud pipelines, Python’s architectural assumptions start to crack.

Distributed training introduces synchronization challenges, inconsistent memory usage, and non-deterministic failures that are difficult to debug. Internal NVIDIA profiles show that PyTorch in production configurations may underutilize GPUs by as much as 40%, due to unoptimized batch balancing and communication delays across nodes.

In most cases, you’ll need to pair Python with a serving layer that supports autoscaling, caching, and resource isolation, which are not baked into core Python libraries.

Workaround: Use libraries like PyTorch Lightning, Horovod, or DeepSpeed to abstract distributed training logic. For model inference, containerize APIs with TorchServe, and adopt cloud AI integration strategies via Kubernetes or Azure ML to manage infrastructure scalability.

Dependency and compatibility pitfalls in production environments

One of the weakest points in Python-based machine learning projects is environment inconsistency. Different dev teams, CI pipelines, and staging environments often run slightly mismatched versions of NumPy, TensorFlow, or CUDA drivers, causing critical failures.

Businesses often rely on legacy systems, including .NET, Java, or older ERP platforms that Python does not integrate with natively. Serialization issues, mismatched encodings, or incompatible protocols can cause silent failures that only surface under load.

Workaround: Lock down environments using conda-lock, Docker, or Nix, and set up automated reproducibility checks. For integration, export models to ONNX to enable Python AI interoperability across tech stacks. Use gRPC or REST as bridging layers and enforce schema validation to reduce runtime edge cases.

Deployment complexity slows down delivery

A recent Stack Overflow poll found that 37% of data teams cite deployment as the hardest part of the AI lifecycle.

For teams working in regulated industries like fintech or healthcare, things are even tougher. Compliance often requires container-level reproducibility, rollback support, and end-to-end audit trails. And when Python applications grow past the prototype phase, bloated Docker images, flaky installs, and ambiguous error messages turn into serious blockers.

Workaround: Use slim, GPU-ready base images from NVIDIA and adopt AI app containerization best practices from day one. Tools like MLflow and Azure Machine Learning pipelines help standardize ML model production workflows, manage versioning, and control deployment across environments.

Monitoring, drift, and maintenance risks

In enterprise AI deployment, production models are constantly exposed to drift because of new user behaviors, changing data distributions, or silent regressions. A survey by Arize AI found that 61% of companies had experienced model performance degradation that went undetected for weeks.

Python, on its own, doesn’t help here. Logging and observability in many AI application development projects are treated as an afterthought, with no real-time monitoring, input logging, or anomaly tracking in place. This creates accuracy risks and liability in sectors like finance, healthcare, or legal tech.

Workaround: Implement full-stack AI workflow management with tools like Neptune.ai, Weights & Biases, or MLflow. Track not just metrics, but distributions, feature drift, and model lineage. Build dashboards around AI monitoring and performance metrics to catch problems before they impact users.

Integration and interoperability considerations

To create real business impact, AI-based apps must plug into the broader operational landscape like CRMs, ERPs, analytics platforms, or legacy infrastructure. This is where many Python-based models stumble: they’re powerful in isolation but fragile in production.

Why Python models struggle in enterprise ecosystems

The majority of enterprise systems aren’t written in Python. Critical workflows usually rely on Java or .NET. Data is stored in structured formats across databases, data warehouses, and ERP/CRM systems, which expect strict schemas, authentication layers, and deterministic behaviors. Python’s loose typing and flexibility clash with these expectations.

Python AI frameworks like PyTorch or TensorFlow support fast experimentation, but deploying those models into real production pipelines involving cloud-native services or legacy business logic requires careful engineering.

Patterns for scale

To enable robust enterprise AI integration, teams should architect Python services with interoperability in mind. The three most effective patterns are:

1. Expose models as microservices

Wrapping AI logic in a FastAPI or Flask application and exposing it via REST or gRPC is the first step. This decouples the Python runtime from the rest of the stack, allowing C#, Java, or front-end clients to consume predictions through a clean API.

To ensure scalability and fault tolerance, these services should be deployed in containers (e.g., Docker) and orchestrated using tools like Kubernetes or Azure ML. Include health checks, versioned endpoints, and input validation layers to stabilize the interface.

Microservice approach supports Python AI interoperability without forcing other teams to learn or embed Python in their workflows.

2. Use messaging and event-driven design

Messaging systems like Kafka, RabbitMQ, or Azure Service Bus allow Python-based AI models to consume events, make predictions, and publish results in near real-time without tight coupling.

This pattern supports data pipelines that span teams and languages. For example, a sales forecasting model in Python might listen to Kafka topics populated by a Java-based order processing system and publish output to a queue consumed by a .NET-based dashboard.

Event-driven architecture enables cloud AI integration strategies that scale naturally, handle backpressure, and avoid API bottlenecks.

3. Serialize models for direct interop

For cases where real-time API calls are too slow or costly, consider exporting Python-trained models into portable formats like ONNX or PMML. These formats allow models to be loaded natively in Java or .NET environments eliminating the Python runtime entirely.

This is very useful in edge or embedded environments where Python isn’t viable or budgets are tight. ONNX supports execution on multiple backends (CPU, GPU, even FPGA) using runtimes like ONNX Runtime or OpenVINO.

Use ONNX when you need to bridge Python-based machine learning with lower-level or embedded systems without sacrificing performance.

Best practices for enterprise-grade interoperability

To ensure long-term reliability and reduce integration debt, consider adopting the following engineering practices:

Design for loose coupling

Avoid hardcoding data paths, environment variables, or internal logic. Expose clear interfaces using OpenAPI (for REST) or Protocol Buffers (for gRPC). Treat your AI model like a product: it should have a stable, documented, and versioned interface.

This allows teams working in other languages or platforms to use AI outputs without depending on Python internals, key for long-term AI workflow management.

Enforce schema contracts

Use tools like Pydantic (with FastAPI) to validate request/response structures. Pair that with upstream contract testing (e.g., Postman collections, gRPC reflection tests) to prevent integration breakage.

This reduces the risk of mismatched data types or payload structures when connecting to systems like enterprise databases or CRMs.

Monitor inputs and outputs

Log the full context: input payloads, feature sets, user IDs, timestamps, and downstream response times. This supports debugging and AI monitoring and performance metrics tracking.

When things break or degrade, you’ll need this telemetry to trace failures across systems.

Version everything

Every model release should include a unique version tag, tied to specific data, training code, and dependencies. Keep changelogs, and implement backward-compatible endpoints where possible.

This is especially important when integrating AI into long-lived ERP systems or government-regulated environments where auditability is a must.

Separate data access from inference

Avoid embedding database access logic directly in the model-serving code. Instead, use dedicated data APIs or message queues to handle data ingestion. This reduces blast radius, improves reuse, and aligns with AI model deployment best practices.

Keeping data and inference layers decoupled allows your Python AI frameworks to stay focused on generating predictions.

When AI application development is done in isolation, it creates silos. By implementing these patterns, teams can ensure that Python-based AI becomes a stable, scalable component of the larger architecture.

Real-world case: Amazon Ads

When Amazon Ads needed to moderate millions of images, videos and texts daily, they turned to Python-based machine learning to build models capable of real‑time content compliance checks. The team chose PyTorch, one of the most widely adopted frameworks, for its flexibility and dynamic graph execution, allowing researchers to iterate quickly on NLP and computer vision architectures.

But that was only half the battle. The real challenge lay in enterprise AI deployment: scaling inference across regions, integrating with Amazon’s existing ad delivery pipelines, and keeping latency low enough to make automated decisions in milliseconds.

Training and optimisation

Models for image and video analysis were fine‑tuned from pretrained architectures in torchvision, while NLP models used transfer learning to process ad copy. Training ran on distributed clusters using PyTorch’s Distributed Data Parallel feature and SageMaker pipelines. This setup allowed the data science team to apply AI workflow management principles: versioning datasets, logging experiments, and tuning hyperparameters without disrupting the production stack.

Production‑grade serving with TorchServe

Amazon Ads wrapped models using TorchServe, an official serving framework for PyTorch to solve th challenges. TorchServe exposed the models as REST endpoints, handled multi‑model deployment, autoscaling, health checks, and built‑in metrics. This was critical for AI model deployment best practices such as version control, rollback, and monitoring latency at P90/P99.

This API‑driven approach also solved a major problem of AI integration with legacy systems: downstream services written in Java and .NET could consume predictions through stable HTTP/gRPC interfaces without embedding Python.

Hardware acceleration for cost and latency

Even with TorchServe, GPU‑based inference was costly. By compiling TorchScript models for AWS Inferentia chips using the Neuron SDK, the team achieved a 71 % reduction in inference cost and a 30 % improvement in latency compared to equivalent GPU deployments. This combination of TorchServe and hardware acceleration illustrates how Python AI performance optimization isn’t just about code, it’s about aligning the entire stack to the workload.

Integration and interoperability

Because ad moderation sits in the middle of Amazon’s advertising pipeline, predictions had to flow into multiple downstream systems: storage, auditing, user dashboards, and compliance tools. By exposing the model through APIs and message queues, Amazon Ads implemented a decoupled architecture that let enterprise AI solutions integrate with existing services while still allowing the ML team to iterate quickly on the Python side.

Lessons for your stack

- Use Python AI frameworks for training and experimentation, but wrap them in production‑grade serving layers like TorchServe to achieve interoperability with other languages and services.

- Follow strict AI model deployment best practices: version endpoints, implement health checks, and collect monitoring and performance metrics at every stage.

- Don’t stop at GPUs, hardware‑specific compilers like Neuron or ONNX Runtime can cut costs and improve latency dramatically.

- Treat integration as a first‑class problem. Message queues, schema contracts, and microservices will save you when you scale.

bART Solutions project: Research software for healthcare domain

In a healthcare R&D setting, we developed a platform that helped researchers structure experimental results, track hypotheses, and automate routine documentation. The system combined rule-based logic with Python-based machine learning components to extract key information from unstructured lab notes and generate structured reports.

To ensure smooth integration with legacy systems, the platform was embedded directly into existing internal databases and lab infrastructure.

Strategic recommendations

Based on real-world cases like Amazon Ads and our hands-on development insights, bART Solutions team put together recommendations that separate fragile prototypes from future-proof AI systems.

1. Match frameworks to your business

Choosing the right toolset isn’t just a tech decision, it’s a business one. Python AI frameworks like PyTorch or TensorFlow are ideal for experimentation, rapid iteration, and deep customization. But they come with tradeoffs when it comes to application scalability, production hardening, and long-term maintainability.

If your use case requires custom model architectures and complex pipelines (e.g. NLP + vision fusion), PyTorch is often the better fit due to its flexibility. If you’re prioritizing reproducibility and scalable pipelines, TensorFlow with TFX or KubeFlow may offer more structure.

Before committing to a framework, assess not just model performance, but the cost of onboarding, deployment tooling, compatibility with your CI/CD, and Python AI interoperability with the rest of your stack.

2. Containerize everything, cloud-native from day one

Use Docker with reproducible builds and pinned dependencies. For AI app containerization, start with base images optimized for CUDA (for GPU workloads), or slim Alpine-based builds for CPU inference. Use tools like Docker Compose or Helm to define services, resources, and dependencies clearly.

Pair containers with cloud AI integration strategies through Kubernetes, Azure ML, SageMaker or Vertex AI to enable autoscaling, rolling updates, and hardware abstraction. This ensures your AI services scale with traffic and can survive restarts, crashes, and updates without manual intervention.

Design for zero-downtime deployment, rolling updates, and node-level resource quotas. Run chaos drills to test how your model infrastructure behaves under pressure.

3. Monitor what matters

Traditional app metrics like CPU, memory, and uptime aren’t enough for AI. You need domain-specific AI monitoring and performance metrics: model latency at P90/P99, prediction drift, feature skew, missing values, and confidence score anomalies.

Use tools like MLflow, Neptune.ai, Arize AI, or Weights & Biases to log training runs, hyperparameters, and real-time production metrics. Set up dashboards that correlate performance with model versions, data slices, and even upstream data quality.

Treat AI workflow management like DevOps: automate observability, alerting, rollback, and validation. This isn’t about perfection—it’s about having the insight to respond when your model starts degrading.

Monitor the full model lifecycle, from data ingestion to inference using structured logging and analytics that integrate with your observability stack (e.g. Prometheus, Grafana, Azure Monitor).

4. Design for future-proofing

Most Python-based models don’t live forever in Python. Over time, models will need to interoperate with legacy systems, third-party APIs, business dashboards, and different programming languages often without warning.

To avoid rewriting everything later, use ONNX or PMML to export trained models into language-agnostic formats that can run on .NET, Java, or cloud-native runtimes like Triton. Define your input/output formats using schemas (OpenAPI, Protobuf, Avro), and treat your models as black boxes exposed through versioned APIs.

More importantly, enterprise AI solutions must prepare for change. Designing for AI model lifecycle management means you’re ready to upgrade, patch, or replace parts of the system without breaking it.

Separate your training logic from deployment infrastructure. Architect APIs and message flows that can survive model rewrites, framework changes, or upstream schema drift.

Conclusion

The reality of AI application development in enterprises is that performance, integration, and lifecycle management decide whether your model becomes a core capability or just another abandoned proof of concept.

Amazon Ads scaled Python-based machine learning beyond the lab: using PyTorch for flexible model development, TorchServe for production-grade serving, hardware-specific runtimes for cost and latency gains, and clean architectural boundaries for AI interoperability.

Choosing the right AI frameworks matters, but it’s only the start. Containerization, observability, schema contracts, and AI model lifecycle management are what allow AI systems to survive scale, audits, and inevitable change. Without them, even the best models collapse under real-world pressure.

This is the real work of enterprise AI deployment: designing systems that integrate with legacy tools, survive version drift, scale under unpredictable loads, and keep delivering measurable value over time.

Ready to turn your Python stack into a stable, scalable foundation for growth?

Get in touch, and let’s do IT!