The landscape of artificial intelligence has rapidly matured in 2025, and Azure AI is positioned at the forefront of this transformation. As of this year, Microsoft has expanded its Azure AI Foundry catalog to include over 1,900 models, spanning foundation, reasoning, domain-specific, multimodal, and small language models. Notably, even Elon Musk’s Grok 3 model family is now available through the Azure platform, marking a major expansion in cross-vendor model interoperability.

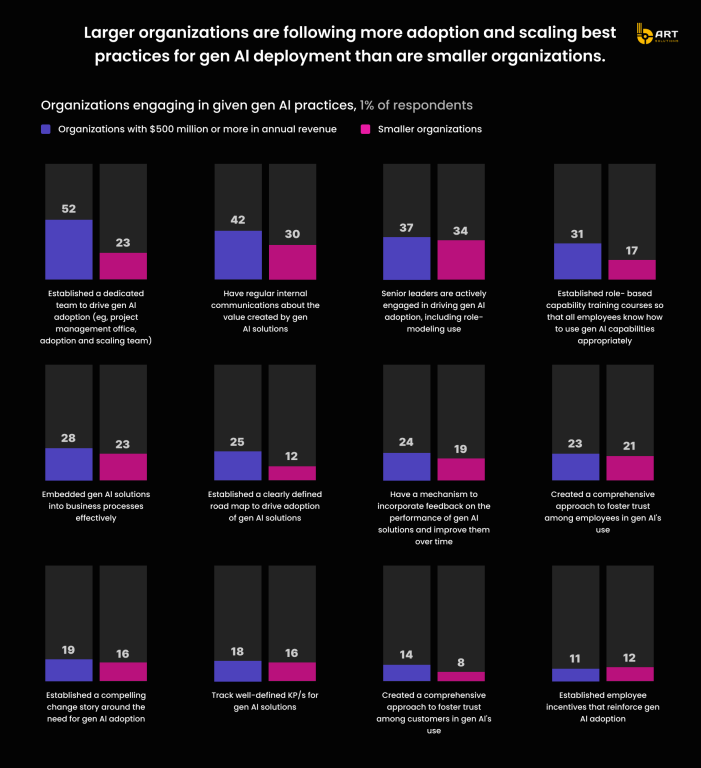

This shift reflects a broader market evolution: AI integration has become a strategic cornerstone for digital-first enterprises. According to McKinsey’s 2025 report on enterprise AI adoption, over 75% of large organizations are now using generative AI in at least one business function, up from 65% just a year earlier. Furthermore, businesses that integrate AI deeply into workflows are reporting higher returns on digital transformation investments.

The significance of this trend cannot be overstated. For enterprises built on cloud-native infrastructure, the combination of Azure AI 2025, Copilot Tuning, and tools like Azure AI Search and Agentic AI development kits allows them to build, deploy, and govern advanced AI at production scale. Gartner predicts that by the end of 2025, over 60% of enterprise applications will embed AI agents, many powered through platforms like Azure OpenAI or orchestrated through Multi-Agent Orchestration frameworks using Microsoft’s tooling.

In this blog, we’ll explore what makes the Azure AI 2025 stack uniquely powerful, from its core services and advanced features to real-world implementation insights and security frameworks. Let’s dive in!

Core Azure AI services

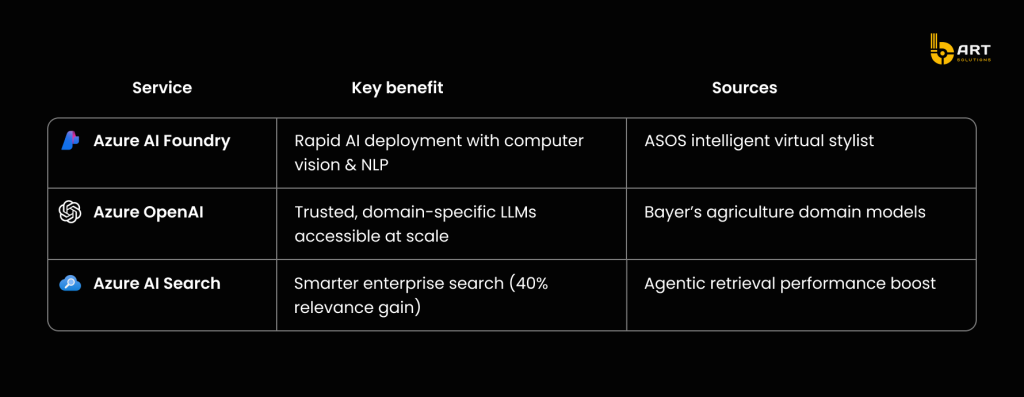

As enterprise AI adoption accelerates, Microsoft has strategically expanded its Azure AI 2025 ecosystem to meet the diverse needs of modern companies from model experimentation to full-scale deployment. This section outlines three cornerstone services that form the operational foundation of AI-powered transformation in Azure: Azure AI Foundry, Azure OpenAI, and Azure AI Search. Each addresses a critical stage of the AI lifecycle while aligning with Microsoft’s broader infrastructure stack, including Azure Fabric, Microsoft Entra ID, and GitHub Copilot.

Azure AI Foundry

Azure AI Foundry is a newly launched unified platform for model development and deployment. It is designed to support teams working across the entire AI value chain: from data scientists prototyping models to DevOps engineers scaling production pipelines. The platform integrates data preprocessing, model training, deployment orchestration, and observability tooling, helping reduce friction between teams and toolsets.

At the heart of Foundry’s value is its native integration with over 1,900 models, including GPT‑5 and Grok 3, which can be deployed directly or fine-tuned using proprietary data. Models span multiple domains: language, vision, speech, code, and time-series forecasting allowing teams to select best-fit models for their vertical.

Foundry also aligns with Microsoft’s AI governance framework, supporting integrated policy enforcement, data compliance workflows, and Azure AI Security protocols at every step. This helps ensure enterprise workloads meet internal and regulatory requirements from day one.

- Real-world impact: ASOS, a leading online fashion retailer, leveraged Azure AI Foundry to quickly build and deploy an intelligent virtual stylist using NLP and computer vision enhancing customer engagement and personalization while accelerating time-to-value.

Azure OpenAI

With Azure OpenAI, Microsoft extends access to leading foundation models like GPT‑5 and Codex through secure, scalable APIs hosted on Azure infrastructure. Unlike public OpenAI endpoints, these deployments live in customer-specific Azure regions, enabling sensitive data processing within known compliance boundaries (e.g., HIPAA, GDPR).

What makes Azure OpenAI particularly powerful is Copilot Tuning. This feature allows organizations to customize LLM behavior using internal documentation, workflows, and terminology, all without retraining the model from scratch. It’s a low-barrier path to building AI agents that reflect domain-specific knowledge.

- Real-world impact: Bayer partnered with Microsoft to create and monetize industry-specific models optimized for agronomy and crop protection. These fine-tuned models are now available via Azure’s model catalog, offering tailored insights for AgTech users illustrating CRM customization in Microsoft Dynamics and domain-specific realism.

Microsoft has also released SDKs and templates to help teams onboard quickly, along with usage analytics to track and optimize model performance across business units.

Azure AI Search

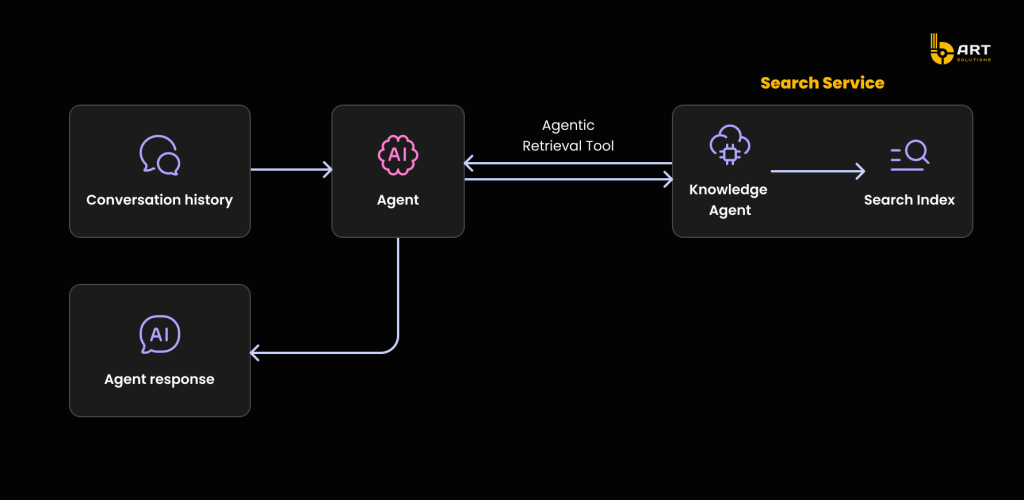

Originally built as a cognitive search engine, Azure AI Search now supports agentic retrieval. Unlike traditional search which returns static results, agentic retrieval enables context-aware interactions that pull and combine information across multiple systems, including SharePoint, Cosmos DB, and Microsoft Fabric.

A blueprint from Microsoft shows how to develop retrieval pipelines with Azure AI Search, tied into Azure AI Foundry agents for full RAG workflows.

Performance insight: Microsoft reports this model-driven retrieval delivers up to 40% more relevant answers in conversational AI scenarios, leveraging intent-aware multistage query execution.

Key capabilities include:

- Hybrid vector + keyword search for high-accuracy document retrieval;

- Real-time indexing from enterprise data sources (CRM, ERP, knowledge bases);

- Plug-and-play connectors for Azure OpenAI, enabling grounded chat experiences.

This unlocks sophisticated use cases such as:

- Automatically answering legal compliance queries using documentation stored in SharePoint;

- Summarizing deal status by pulling opportunity data from both Salesforce and Microsoft Dynamics 365 simultaneously;

- Supporting HR teams with AI assistants that retrieve employee policy details across disparate databases.

Advanced AI сapabilities

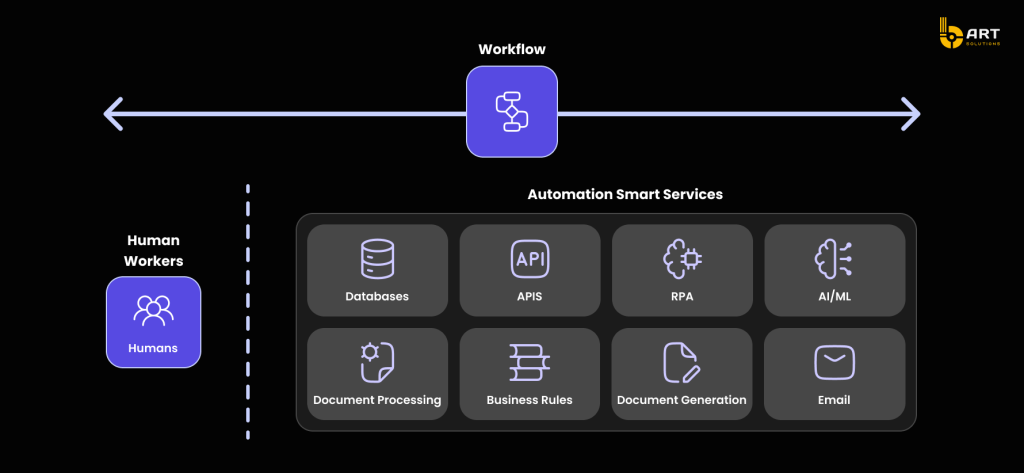

As businesses become more AI matured, they demand capabilities beyond simple prompt-response models. Enterprise use cases involve long-running tasks, memory, coordination between systems, and behavior fine-tuned to domain-specific requirements. In 2025, Azure AI meets these demands through a set of advanced capabilities: Agentic AI, multi-agent orchestration, and flexible fine-tuning and customization frameworks. They became the backbone of scalable, intelligent workflows across cloud and hybrid infrastructures.

Agentic AI for autonomous workflows

Agentic AI represents models that don’t just return responses, but take autonomous actions, access tools, and follow multi-step instructions. Azure AI 2025 supports the development of such agents via integrations with OpenAI, Azure AI Foundry, and toolkits like Semantic Kernel and LangChain.

These autonomous agents can be deployed for different use cases:

- Software engineering: In Microsoft’s own infrastructure, GitHub’s Copilot Agents now manage build tasks, generate documentation, refactor codebases, and perform security scans. These agents don’t operate in isolation, they interact with code repositories, issue trackers, and test environments.

- Business operations: In business, early adopters are using Azure-based agents to process and validate information by reading emails, parsing attachments, and logging respective records in CRM and ERP systems without human oversight.

The key to agentic systems is their ability to access memory, tools, and external APIs. Through Azure AI Search, agents can perform agentic retrieval, using structured context from enterprise data lakes or internal wikis to inform their decisions. Microsoft’s commitment to AI interoperability also ensures these agents can operate across hybrid systems using REST APIs or Microsoft Power Platform connectors.

Multi-agent orchestration

For workflows too complex for a single agent, multi-agent orchestration is the way to go. Azure provides native support for orchestrating multiple specialized agents each responsible for part of the task with built-in coordination mechanisms and communication protocols.

For example, in a multi-agent system deployed via Azure AI, one agent might extract structured information from lengthy documents using GPT‑5, another cross-checks that information against internal policies or datasets accessed through Azure AI Search, and a third agent compiles the findings into a summary or recommended action plan, which is formatted using familiar Microsoft tools like Word or Excel. Each of these agents operates asynchronously and independently, coordinated by a planner module built using open interoperability standards such as LangChain or Semantic Kernel’s planner abstraction. This architecture enables efficient handling of multi-step workflows without human intervention, while preserving transparency and control over each agent’s role.

Azure’s architecture allows these orchestrated agents to span cloud, edge, and even on-prem systems integrated with Microsoft Fabric, Power Automate, and Azure Integration Services.

Such multi-agent setups are ideal for:

- Financial auditing (document extraction + rules compliance + report generation);

- IT service management (incident triage + diagnostic agents + patch scheduling);

- Sales and marketing ops (lead routing + data enrichment + campaign drafting).

Fine-tuning & customization

Enterprise success with LLMs often hinges on customization. While base models like GPT‑5 provide a strong foundation, they may lack the industry-specific vocabulary, tone, or operational alignment needed in regulated environments. Azure addresses this with layered fine-tuning options, now natively supported through Azure AI Foundry.

Azure supports both:

- Parameter-efficient fine-tuning methods like LoRA/QLoRA, allowing teams to adapt massive models without needing GPU-intensive full retraining

- Preference-based alignment using Direct Preference Optimization (DPO), a method growing popular among companies that need human-centric behavioral shaping

For teams that don’t have in-house ML experts, Copilot Tuning offers a low-code way to guide model behavior. This capability enables non-tech people to provide example inputs/outputs and refine model tone or compliance boundaries without writing training loops.

Typical applications include:

- Healthcare: fine-tuning models to recognize specialty-specific terminology in radiology or oncology

- Customer service: customizing language tone and escalation behavior for enterprise-specific support tiers

- Finance: aligning model behavior to industry regulations (e.g., GDPR, FINRA) through soft rule compliance

The developer-friendly tiers of Azure’s tuning infrastructure allow experimentation in isolated test environments before deployment to production, ensuring safety and stability.

With agentic AI to handle tasks, multi-agent orchestration to scale complexity, and fine-tuning to align with business goals, Azure AI positions itself in 2025 as a strategic cornerstone for enterprise AI adoption.

Integration with Azure ecosystem

In 2025, Azure AI demonstrates its full power when deployed within the broader Microsoft ecosystem through Microsoft Fabric, Azure Cosmos DB, and GitHub Copilot. These platforms act as the operational nervous system for scalable AI-powered enterprise transformation.

Microsoft Fabric for unified data foundation

Microsoft Fabric is the central platform for data integration in business environments. It combines data engineering, warehousing, real-time analytics, and AI application development into a single SaaS environment. For businesses building AI models on Azure AI Foundry or deploying custom GPT-5 pipelines, Fabric ensures consistent access to clean, contextualized, and timely data.

Key capabilities include:

- Native support for Cosmos DB: This enables real-time ingestion and querying of high-throughput operational data for AI model inference. When connected to digital twin builders, real-time telemetry from manufacturing, logistics, or retail environments can drive simulations, predictive models, and anomaly detection.

- Tight coupling with Azure AI workloads: Data can be automatically routed into models built or tuned in Azure OpenAI, minimizing the latency between insight generation and decision execution.

- Cross-domain interoperability: Microsoft Fabric acts as a bridge between different data silos, from ERP systems to IoT pipelines, helping unify fragmented datasets into an AI-ready fabric.

By aligning data pipelines with AI workflows, Fabric allows developers and data scientists to build, test, and deploy models with reduced friction and maximum context.

Azure Cosmos DB

Azure Cosmos DB, Microsoft’s globally distributed NoSQL database, plays a critical role in real-time data sync, sensor stream processing, and cloud-based integrations. For use cases such as agentic AI, where autonomous systems must react to live data, Cosmos DB enables millisecond-level reads and writes across globally scaled infrastructures.

When paired with digital twin models, companies can simulate complex systems, from factory floors to supply chains, and continuously refine those models based on data stored in Cosmos DB. These simulations can then be used by agentic AI to make recommendations, execute autonomous tasks, or trigger downstream workflows.

GitHub Copilot & AI-augmented development

The integration of GitHub Copilot and Copilot Tuning into the Microsoft ecosystem transforms how developers build and deploy software. Beyond writing code, Copilot embeds full AI agents into the development environment.

Key advancements include:

- Copilot tuning: Allows enterprise users to adapt models like GPT‑5 to their internal documentation, codebase patterns, and business logic. It reduces model hallucination and increases developer trust;

- Embedded AI agents in CI/CD workflows: With GitHub Actions, AI agents can be embedded into pipelines to review PRs, generate changelogs, or identify security vulnerabilities;

- Multi-agent coordination for devops tasks: Orchestrated through tools like Azure Logic Apps or LangChain, agents can manage dependencies, check test coverage, and update environment configs, acting as a virtual dev team assistant.

This is central to Microsoft’s vision for AI operationalization where developers, not only data scientists, can bring AI models into production in context-rich, version-controlled environments.

Security & compliance

As AI-powered tools become deeply integrated into cloud environments, ensuring trust, transparency, and regulatory compliance becomes fundamental. Azure AI has introduced security frameworks that address risks specific to AI model development, content generation, and cross-system integrations.

AI Red Teaming Agent & PyRIT framework

One of the most notable advancements in Azure AI Security is the launch of an automated AI Red Teaming Agent, a solution designed to simulate adversarial attacks against large language models. This agent evaluates vulnerabilities such as prompt injection, jailbreak attempts, data leakage, or model drift.

It is tightly integrated with Microsoft’s PyRIT (Python Risk Identification Toolkit), a framework released in 2023 to standardize risk evaluation across AI models. With PyRIT, companies can:

- Simulate unsafe queries to assess how models like GPT‑5 respond under adversarial pressure;

- Monitor for response inconsistencies or unintended disclosures in fine-tuned Azure OpenAI models;

- Run continuous automated scans as part of DevSecOps pipelines, especially for high-risk use cases like legal document generation, healthcare summaries, or CRM data synchronization.

The AI Red Teaming Agent supports a growing library of risk profiles aligned with Azure AI Compliance standards, including those outlined in ISO/IEC 42001:2023 and NIST’s AI Risk Management Framework.

Content safety for text & image outputs

Another critical component of Azure AI Security measures is the Content Safety feature. A real-time monitoring system that evaluates both text and image outputs generated by Azure OpenAI and integrated AI agents.

Key capabilities include:

- Toxicity, hate speech, and sexual content detection: Outputs are analyzed using multi-language classifiers trained on sensitive content taxonomies;

- Inline response blocking: Potentially harmful outputs are filtered before being surfaced to users or sent to downstream applications;

- Audit logging: Every flagged interaction is logged, allowing teams to meet data security and compliance standards.

These mechanisms are particularly critical when AI systems are embedded in customer-facing apps, such as user-faced chatbots, virtual sales agents, or content generators connected to cross-platform integrations. Content safety ensures regulatory adherence, brand integrity, and user trust.

Strategic considerations

Deploying Azure AI in 2025 demands a strategy that aligns business objectives with the evolving capabilities of Microsoft’s AI ecosystem. With the increasing complexity of models, tools, and deployment options, decisions should be based on two pillars: intelligent model selection and sustainable operationalization.

Model selection

Azure’s model library has expanded significantly, including GPT-5, Grok 3, open-source LLMs (like Falcon, LLaMA 3, and Mistral), as well as fine-tunable proprietary models.

Choosing the right model means navigating several factors:

1. Task specificity

- For code synthesis, Codex or lighter GPT variants are enough when embedded via GitHub Copilot.

- For multi-domain reasoning, strategic planning, or customer interaction, more capable models like GPT‑5.

- For retrieval-augmented applications, pairing smaller models with Azure AI Search and agentic retrieval outperforms large-scale LLMs in latency and interpretability.

2. Data sensitivity & privacy

Projects dealing with confidential data (healthcare, legal, HR, or government) should avoid shared model hosting. Azure’s compliance frameworks and private deployment options, including Confidential Compute enclaves and network-isolated AI endpoints, ensure enterprise-grade privacy.

3. Performance vs. cost

While GPT‑5 offers unmatched depth, its costs can be prohibitive. Azure enables users to benchmark latency, token throughput, and cost per 1K tokens across models, with visual dashboards available in Azure OpenAI management console.

Microsoft’s Azure AI model guidance offers comparative metrics on performance, latency, and token limits.

4. Customization

Teams looking to embed domain-specific logic or language must assess whether the project warrants:

- Prompt engineering (low-effort, fast iteration);

- System-level instruction tuning (via Copilot Tuning);

- Lightweight fine-tuning (e.g., LoRA/QLoRA);

- Full-scale training using enterprise data (requires budget, infrastructure, and MLOps).

Operationalization

Businesses need repeatable, observable, and governed pipelines to deliver long-term value. Tools like Microsoft Fabric and Azure Monitor, provide the foundation for enterprise-grade applications.

1. Versioning & experimentation

Within Azure AI Foundry, developers can:

- Track prompt changes and associated outputs;

- Tag models by business function or department;

- Use model registries to enforce deployment of approved builds.

This prevents drift and enables regression testing on prompt or model changes.

2. Deployment flexibility

Azure supports multiple serving environments:

- Public hosted endpoints (shared infrastructure);

- Private endpoints within Azure VNet;

- Edge deployment via containers or Azure Stack Hub.

This allows teams to align deployment strategy with latency, compliance, and data locality needs.

3. Monitoring, logging, and feedback loops

Operational maturity includes:

- Real-time analytics on latency, response quality, and token usage;

- User rating systems for prompt output validation;

- Human-in-the-loop escalation for sensitive use cases (e.g., legal document review).

Tools like Azure Monitor, Application Insights, and Power BI dashboards help maintain visibility over business-critical AI workflows.

Strategic recommendations

To avoid common failure points, our Microsoft certified developers recommend several best practices:

- Start small with a high-impact use case (e.g., document summarization or code generation) to build organizational trust;

- Use sandboxed tiers (e.g., developer-friendly tiers in Foundry) for R&D before scaling;

- Define governance boundaries early, including what level of control humans must retain over AI decisions;

- Invest in education: Microsoft Learn, FastTrack for Azure AI, and the Partner Training Center provide ongoing support for upskilling internal teams.

Conclusion

Azure AI has grew into a powerful, enterprise-ready ecosystem in 2025, bringing together model orchestration, agentic reasoning, and deep integration with the broader Microsoft stack. For businesses looking to modernize their operations, these tools offer a way for intelligent automation, stronger data alignment, and faster development cycles.

With practical experience and Microsoft-certified expertise, we help teams align Azure AI capabilities with real-world business priorities by choosing the right model, designing agent workflows, or ensuring governance across cloud-native systems.

If you are exploring how to integrate Azure AI into business workflows, we’re here to help!